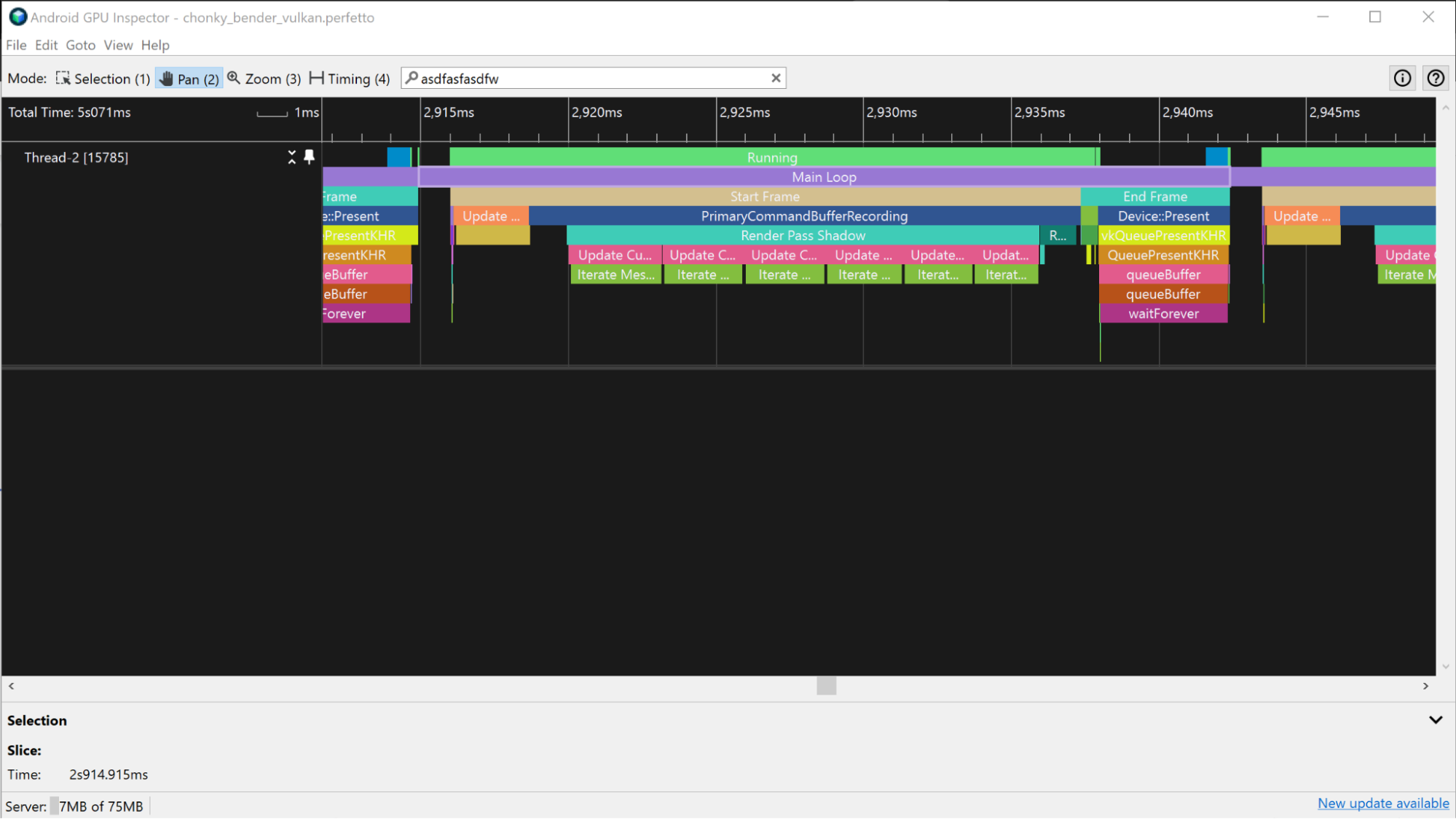

CPU execution/dispatch time dominates and slows down small TorchScript GPU models · Issue #72746 · pytorch/pytorch · GitHub

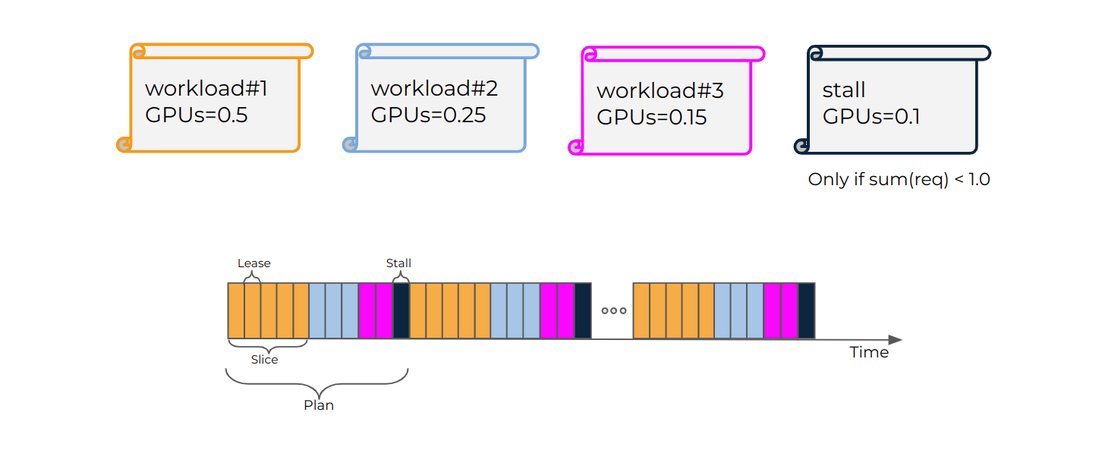

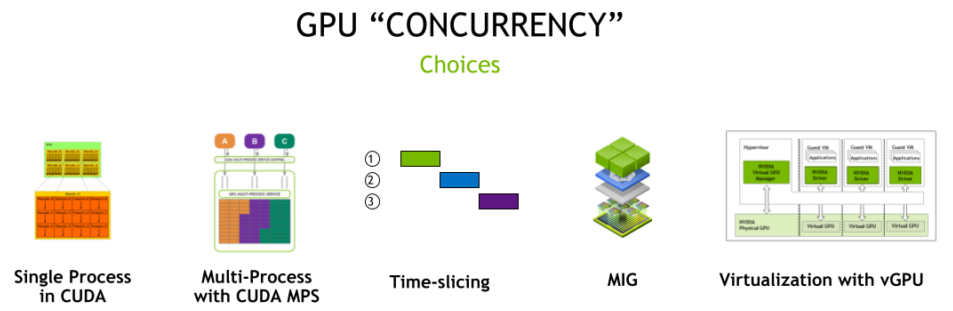

VMware vSphere 7 with NVIDIA AI Enterprise Time-sliced vGPU vs MIG vGPU: Choosing the Right vGPU Profile for Your Workload - VROOM! Performance Blog

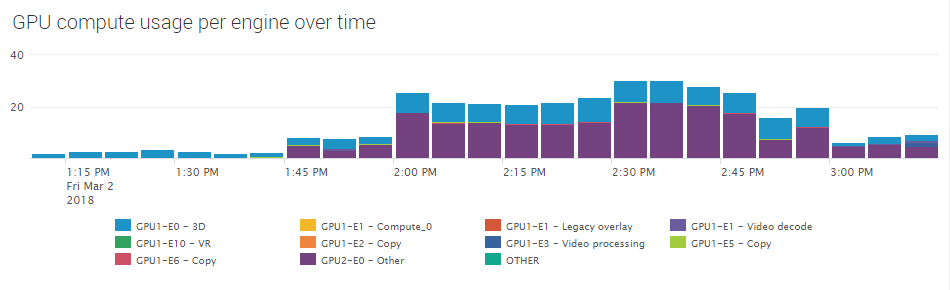

Monitoring GPU Usage per Engine or Application • DEX & endpoint security analytics for Windows, macOS, Citrix, VMware on Splunk